By Jonathan Tan, PhD candidate, Western University

The power of AI

Modern artificial intelligence (AI) systems can accurately predict protein structures [1], critically evaluate chess positions [2], converse believably with humans [3], and generate video from simple text prompts [4]. The machinery underlying these systems is the neural network, which can comprise millions to billions of simple nodes. Individually, each node performs only a simple mathematical operation, so one would not expect such valuable and powerful AIs to arise from them. However, through repeated training on data, these simple building blocks produce emergent behaviours.

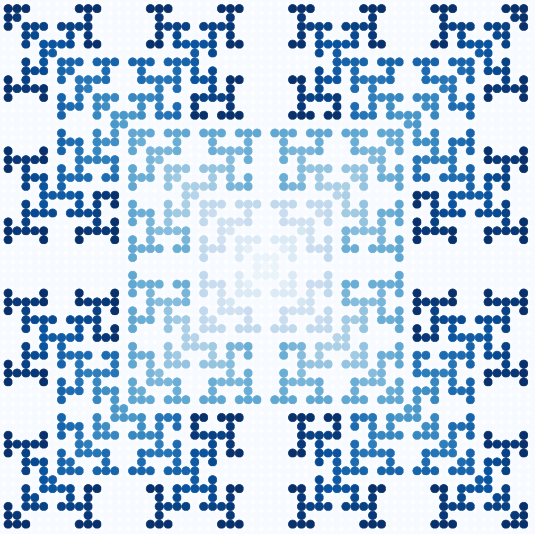

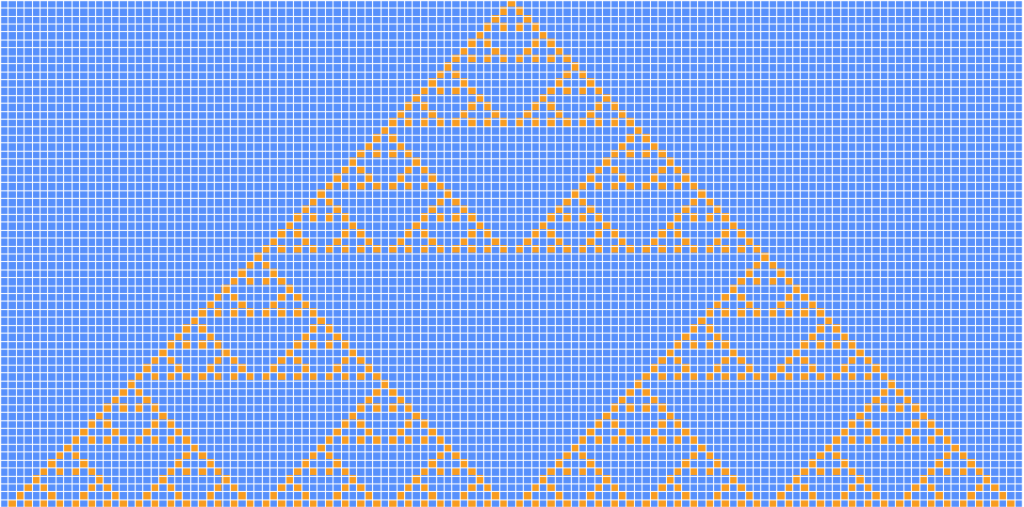

Cellular automata

Cellular automata are systems made up of grids of cells, each of which is in one of several possible states. The states change according to rules that can be extremely simple, yet the resulting systems can model complex phenomena such as traffic jams and forest fires [5]. Both cellular automata and artificial intelligence create complexity from simple elements and enhance our capability to model and learn from the world around us.
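To make the idea of "cells, states, and rules" concrete, here is a minimal sketch of a one-dimensional cellular automaton with two states per cell. It is an illustration only, not code taken from the notebook: the function names `step` and `run`, the choice of rule 90 (in the standard numbering of elementary cellular automata), and the wrap-around edges are all assumptions made for this example.

```python
def step(cells, rule=90):
    """Advance a one-dimensional, two-state cellular automaton by one step.

    `rule` is a number from 0 to 255 whose eight bits give the new state
    for each of the eight possible (left, centre, right) neighbourhoods.
    """
    n = len(cells)
    new_cells = []
    for i in range(n):
        # Assumption: the row wraps around, so the edges are neighbours.
        left = cells[(i - 1) % n]
        centre = cells[i]
        right = cells[(i + 1) % n]
        # Read the three states as a binary number between 0 and 7.
        pattern = 4 * left + 2 * centre + right
        # Look up the matching bit of the rule number.
        new_cells.append((rule >> pattern) & 1)
    return new_cells


def run(width=31, steps=15, rule=90):
    """Start from a single occupied cell and record every generation."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    # Print each generation as a row of text, one row per time step.
    for row in run():
        print("".join("#" if c else "." for c in row))
```

Changing the `rule` argument (for example to 30) gives a very different pattern from the same starting row, even though the update procedure stays equally simple.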

Cellular automata modelled with Python

This notebook summarizes and explores the chapter on iterated growth in Paul Charbonneau’s book Natural Complexity [5]. In it, we are introduced to cellular automata, both one- and two-dimensional, and visualize how complex patterns emerge from simple rules. The notebook breaks down the code, explaining step by step how these cellular automata are simulated, and can be understood and appreciated by a high-school audience with limited programming knowledge. The notebook also produces interactive simulations that let students explore the chapter themselves, and with minimal coding it can be modified to create new visualizations that dive deeper into the concepts presented in the chapter.
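As a taste of the two-dimensional case, the following is a minimal sketch of a growth process on a square grid. It is not the notebook's code, and the rule used here is an assumption chosen for illustration: an empty cell becomes occupied when exactly one of its four nearest neighbours is occupied, and occupied cells stay occupied. The names `grow` and `simulate` are hypothetical.

```python
def grow(grid):
    """Apply one growth step to a 2D grid of 0s (empty) and 1s (occupied).

    Illustrative rule (an assumption, not necessarily the book's):
    an empty cell becomes occupied if exactly one of its four nearest
    neighbours is occupied. Occupied cells never empty again.
    """
    rows, cols = len(grid), len(grid[0])
    new_grid = [row[:] for row in grid]  # copy, so all cells update together
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] == 1:
                continue
            occupied = 0
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                # Assumption: cells beyond the edge count as empty.
                if 0 <= rr < rows and 0 <= cc < cols:
                    occupied += grid[rr][cc]
            if occupied == 1:
                new_grid[r][c] = 1
    return new_grid


def simulate(size=15, steps=5):
    """Grow a pattern from a single occupied cell at the centre."""
    grid = [[0] * size for _ in range(size)]
    grid[size // 2][size // 2] = 1
    for _ in range(steps):
        grid = grow(grid)
    return grid


if __name__ == "__main__":
    for row in simulate():
        print("".join("#" if cell else "." for cell in row))
```

Counting neighbours on the old grid and writing to a copy keeps the update synchronous: every cell sees the same generation. Swapping the condition `occupied == 1` for another test is a quick way to experiment with different growth patterns.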

See the Python code

Binder

Google Colab [>18 years old or district license]

References

1. Jumper, J., Evans, R., Pritzel, A., Green, T., Figurnov, M., Ronneberger, O., Tunyasuvunakool, K., Bates, R., Žídek, A., Potapenko, A., Bridgland, A., Meyer, C., Kohl, S. A. A., Ballard, A. J., Cowie, A., Romera-Paredes, B., Nikolov, S., Jain, R., Adler, J., … Hassabis, D. (2021). Highly accurate protein structure prediction with AlphaFold. Nature, 596(7873), 583–589. https://doi.org/10.1038/s41586-021-03819-2

2. Erly, A. (2020). Stockfish+NNUE, Strongest Chess Engine Ever, To Compete In CCCC. https://www.chess.com/news/view/stockfishnnue-strongest-chess-engine-ever-to-compete-in-cccc

3. Leiter, C., Zhang, R., Chen, Y., Belouadi, J., Larionov, D., Fresen, V., & Eger, S. (2023). ChatGPT: A Meta-Analysis after 2.5 Months (arXiv:2302.13795). arXiv. http://arxiv.org/abs/2302.13795

4. Blattmann, A., Rombach, R., Ling, H., Dockhorn, T., Kim, S. W., Fidler, S., & Kreis, K. (2023). Align Your Latents: High-Resolution Video Synthesis With Latent Diffusion Models. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 22563–22575.

5. Charbonneau, P. (2017). Natural complexity: A modeling handbook. Princeton University Press.